A guest post by Paul Hiemstra.

————

Fortran and C programmers often say that interpreted languages like R are nice and all, but lacking in speed. How fast something runs in R depends greatly on how it is implemented, i.e. which packages and functions one uses. A prime example, which shows up regularly on the R-help list, is letting a vector grow as you perform an analysis. In pseudo-code this might look like:

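```r
dum = NULL
for (i in 1:100000) {
  # new_outcome = ...do some stuff...
  new_outcome = i              # placeholder so the loop actually runs
  dum = c(dum, new_outcome)    # dum is copied into a longer vector on every iteration
}
```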

The problem here is that dum keeps growing. Each call to c() makes R allocate a new, longer vector and copy all existing elements into it, so the total work grows roughly quadratically with the number of iterations, which is terribly slow. Preallocating dum to its final length greatly improves performance. Alternatively, apply-type functions or functions from the plyr package avoid this problem altogether. But even among these more advanced methods, different implementations can differ greatly in speed.
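A minimal base-R sketch of the three approaches, assuming a trivial placeholder computation (`i^2`) stands in for "do some stuff". The first function grows the vector with `c()`, the second preallocates it with `numeric()` and fills it by index, and the third lets `vapply` take care of the allocation. The function names `grow_vec`, `prealloc_vec` and `apply_vec` are made up for this illustration.

```r
n <- 10000

# 1. Growing: c() builds a new, longer vector and copies dum on every iteration
grow_vec <- function(n) {
  dum <- NULL
  for (i in 1:n) {
    new_outcome <- i^2            # placeholder for "do some stuff"
    dum <- c(dum, new_outcome)
  }
  dum
}

# 2. Preallocating: allocate the full length once, then fill it by index
prealloc_vec <- function(n) {
  dum <- numeric(n)
  for (i in seq_len(n)) {
    dum[i] <- i^2
  }
  dum
}

# 3. apply-type function: vapply allocates the result vector itself
apply_vec <- function(n) vapply(seq_len(n), function(i) i^2, numeric(1))

system.time(res_grow <- grow_vec(n))
system.time(res_prealloc <- prealloc_vec(n))
system.time(res_apply <- apply_vec(n))
```

All three return the same vector. The timings depend on the machine, but the growing version falls further behind as `n` increases, because every `c()` call copies everything accumulated so far.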

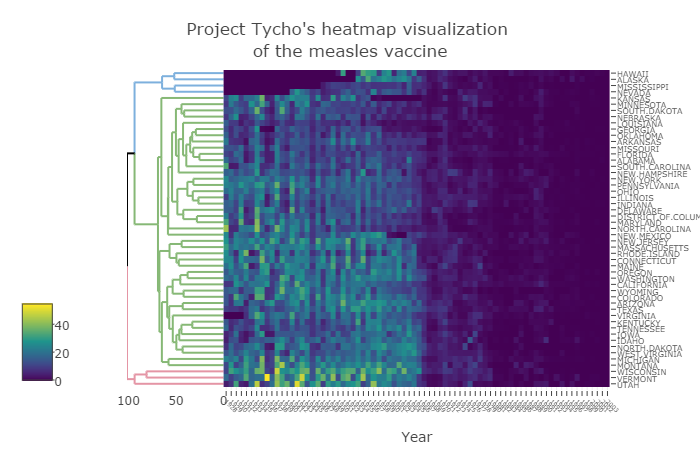

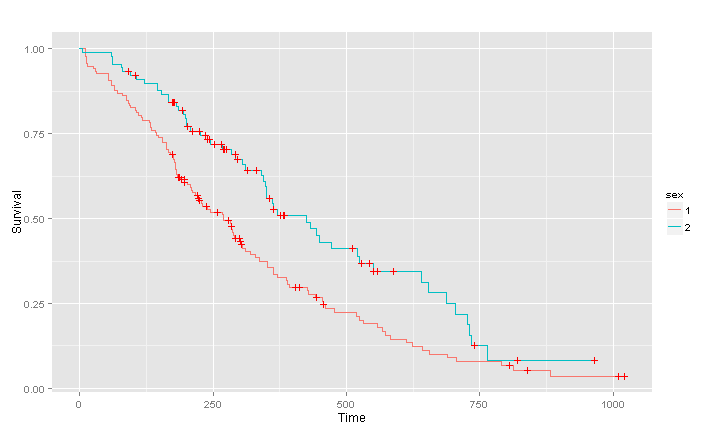

Take the following example. We create a dataset with two columns: one with values (e.g. amount of rainfall) and one with a category (e.g. monitoring station id). We would like to know the mean value per category. One way is to use for loops, but I'll skip that one for now. Three possibilities that I know of are ddply (plyr), ave (base R) and data.table. The code at the end of this post compares these three methods. The outcome in terms of speed (elapsed time in seconds) is:


| Rows (datsize) | Categories (noClasses) | ave (s) | ddply (s) | data.table (s) |
|---:|---:|---:|---:|---:|
| 1e+05 | 10 | 0.091 | 0.035 | 0.011 |
| 1e+05 | 50 | 0.102 | 0.050 | 0.012 |
| 1e+05 | 100 | 0.105 | 0.065 | 0.012 |
| 1e+05 | 200 | 0.109 | 0.101 | 0.010 |
| 1e+05 | 500 | 0.113 | 0.248 | 0.012 |
| 1e+05 | 1000 | 0.123 | 0.438 | 0.012 |
| 1e+05 | 2500 | 0.146 | 0.956 | 0.013 |
| 1e+05 | 10000 | 0.251 | 3.525 | 0.020 |
| 1e+06 | 10 | 0.905 | 0.393 | 0.101 |
| 1e+06 | 50 | 1.003 | 0.473 | 0.100 |
| 1e+06 | 100 | 1.036 | 0.579 | 0.105 |
| 1e+06 | 200 | 1.052 | 0.826 | 0.106 |
| 1e+06 | 500 | 1.079 | 1.508 | 0.109 |
| 1e+06 | 1000 | 1.092 | 2.652 | 0.111 |
| 1e+06 | 2500 | 1.167 | 6.051 | 0.117 |
| 1e+06 | 10000 | 1.338 | 23.224 | 0.132 |
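To put the timings in relative terms, a small base-R sketch can recompute the speed ratios. The values below are copied by hand from the table (columns `tave`, `tddply` and `tdata.table` as produced by the benchmark code), and the ratio column names are made up for this example.

```r
# Elapsed times in seconds, copied from the timing table
timings <- data.frame(
  datsize     = rep(c(1e5, 1e6), each = 8),
  noClasses   = rep(c(10, 50, 100, 200, 500, 1000, 2500, 10000), times = 2),
  tave        = c(0.091, 0.102, 0.105, 0.109, 0.113, 0.123, 0.146, 0.251,
                  0.905, 1.003, 1.036, 1.052, 1.079, 1.092, 1.167, 1.338),
  tddply      = c(0.035, 0.050, 0.065, 0.101, 0.248, 0.438, 0.956, 3.525,
                  0.393, 0.473, 0.579, 0.826, 1.508, 2.652, 6.051, 23.224),
  tdata.table = c(0.011, 0.012, 0.012, 0.010, 0.012, 0.012, 0.013, 0.020,
                  0.101, 0.100, 0.105, 0.106, 0.109, 0.111, 0.117, 0.132)
)

# How many times slower than data.table are ave and ddply?
timings$ave_vs_dt   <- round(timings$tave   / timings$tdata.table, 1)
timings$ddply_vs_dt <- round(timings$tddply / timings$tdata.table, 1)

# Values below 1 mean ddply was faster than ave
timings$ddply_vs_ave <- round(timings$tddply / timings$tave, 2)

timings[, c("datsize", "noClasses", "ave_vs_dt", "ddply_vs_dt", "ddply_vs_ave")]
```

With these numbers, `ddply_vs_ave` is below 1 only for 200 categories or fewer, while `ave_vs_dt` stays above 8 in every setting.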

ddply clearly performs very badly when the number of unique categories is large: with 10,000 categories it is roughly 14 to 17 times slower than ave. For a small number of categories (up to 200 here), however, ddply is actually faster than ave. The data.table option is by far the fastest, beating ave by a factor of roughly 8 to 13 in every setting. Hadley Wickham (author of plyr) responded to this comparison:

> This is a drawback of the way that ddply always works with data frames. It will be a bit faster if you use summarise instead of data.frame (because data.frame is very slow), but I'm still thinking about how to overcome this fundamental limitation of the ddply approach.

I hope this comparison is of use to readers. And remember: think before complaining that R is slow.

Paul (e-mail: [email protected])

P.S. This blog post is based on discussions on the R-help and manipulatr mailing lists:

– http://www.mail-archive.com/[email protected]/msg142797.html

– http://groups.google.com/group/manipulatr/browse_thread/thread/5e8dfed85048df99

R code to perform the comparison

```r
library(plyr)         # ddply and .(); current ggplot2 no longer attaches plyr
library(ggplot2)
library(data.table)
theme_set(theme_bw())

datsize = c(10e4, 10e5)                                  # 1e5 and 1e6 rows
noClasses = c(10, 50, 100, 200, 500, 1000, 2500, 10e3)   # number of categories
comb = expand.grid(datsize = datsize, noClasses = noClasses)

res = ddply(comb, .(datsize, noClasses), function(x) {
  # Random values, each with a random category label
  expdata = data.frame(value = runif(x$datsize),
                       cat = round(runif(x$datsize, min = 0, max = x$noClasses)))
  expdataDT = data.table(expdata)

  # Elapsed time (seconds) to compute the mean value per category
  t1 = system.time(res1 <- with(expdata, ave(value, cat)))
  t2 = system.time(res2 <- ddply(expdata, .(cat), function(d) c(value = mean(d$value))))
  t3 = system.time(res3 <- expdataDT[, mean(value), by = cat])

  return(data.frame(tave = t1[3], tddply = t2[3], tdata.table = t3[3]))
}, .progress = 'text')

res

# Log of elapsed time against number of categories, one panel per dataset size
ggplot(reshape2::melt(res, id.vars = c("datsize", "noClasses")),
       aes(x = noClasses, y = log(value), color = variable)) +
  geom_line() + facet_wrap(~ datsize)
```
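The three methods in the benchmark do not return results of the same shape, which is worth keeping in mind when reading the timings. Here is a minimal base-R sketch on a made-up toy dataset (the station labels `a`, `b` and `c` are hypothetical). `ave` returns a vector as long as its input, with each value replaced by the mean of its category, whereas ddply and data.table return one row per category. Keeping the first row of each category turns the `ave` output into that per-category summary.

```r
# Toy data: six rainfall values at three hypothetical monitoring stations
toy <- data.frame(value = c(1, 2, 3, 4, 5, 6),
                  cat   = c("a", "a", "b", "b", "c", "c"))

# ave() applies FUN (mean by default) within each group and returns
# a vector of the same length as 'value'
per_row <- with(toy, ave(value, cat))
per_row
# [1] 1.5 1.5 3.5 3.5 5.5 5.5

# Collapse to one mean per category by keeping the first row of each group
first_in_cat <- !duplicated(toy$cat)
per_cat <- data.frame(cat  = toy$cat[first_in_cat],
                      mean = per_row[first_in_cat])
per_cat
```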